For this part, I first wrote the function computeH, which takes the set of corresponding points between the two images and computes the homography matrix that transforms one into the other. I then wrote the warp function, which first warps the image corners to determine the output shape and the translation needed to shift the warped image into view. It then applies the homography to all points in the original image and returns the warped image, the translation matrix, and the input points transformed through the combined translation and homography.
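As a rough illustration of these two steps, here is a minimal sketch of a least-squares homography fit and of the corner-based bounding box. The names `compute_h`, `apply_h`, and `warp_bounds` are hypothetical stand-ins, and the SVD-based solve is an assumption about how such a function could be written, not necessarily how my computeH works internally.

```python
import numpy as np

def compute_h(src, dst):
    """Estimate the 3x3 homography mapping src (N x 2) to dst (N x 2), N >= 4."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear constraints on H.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    A = np.array(rows)
    # The right singular vector with the smallest singular value minimizes |A h|.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_h(H, pts):
    """Apply H to N x 2 points and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    out = homog @ H.T
    return out[:, :2] / out[:, 2:3]

def warp_bounds(H, height, width):
    """Warp the four corners to get the output shape and the shifting translation."""
    corners = np.array([[0, 0], [width - 1, 0],
                        [width - 1, height - 1], [0, height - 1]])
    warped = apply_h(H, corners)
    min_xy = np.floor(warped.min(axis=0))
    max_xy = np.ceil(warped.max(axis=0))
    # Translation that moves the top-left of the warped bounding box to (0, 0).
    T = np.array([[1, 0, -min_xy[0]], [0, 1, -min_xy[1]], [0, 0, 1]])
    out_shape = (int(max_xy[1] - min_xy[1]) + 1, int(max_xy[0] - min_xy[0]) + 1)
    return T, out_shape
```

In this sketch the combined transform for the image would be `T @ H`, which keeps every warped pixel at non-negative coordinates.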

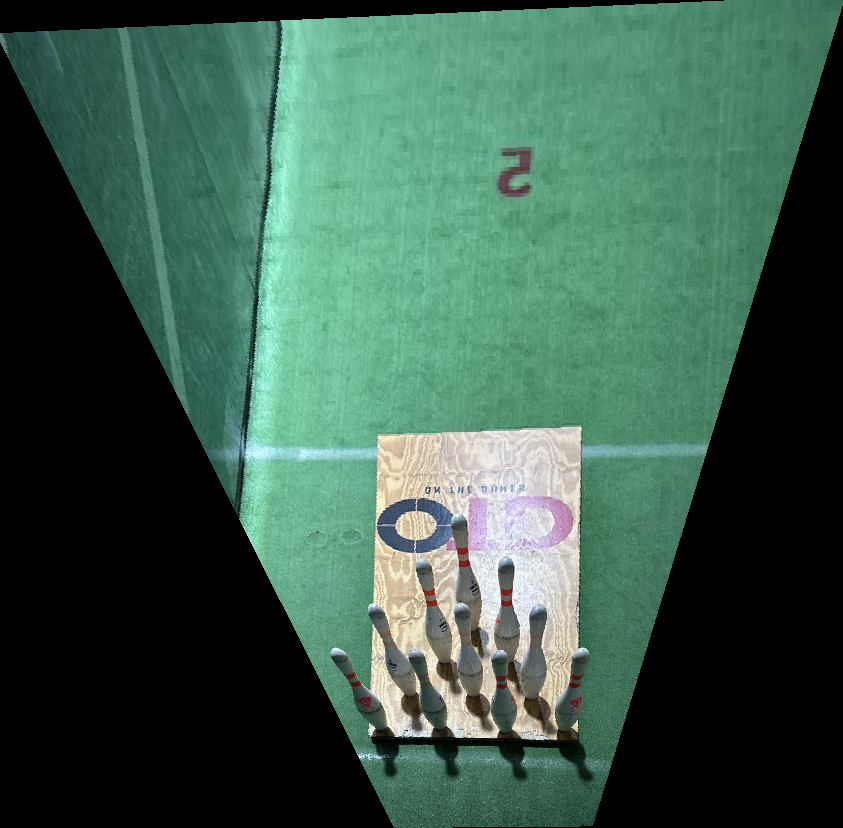

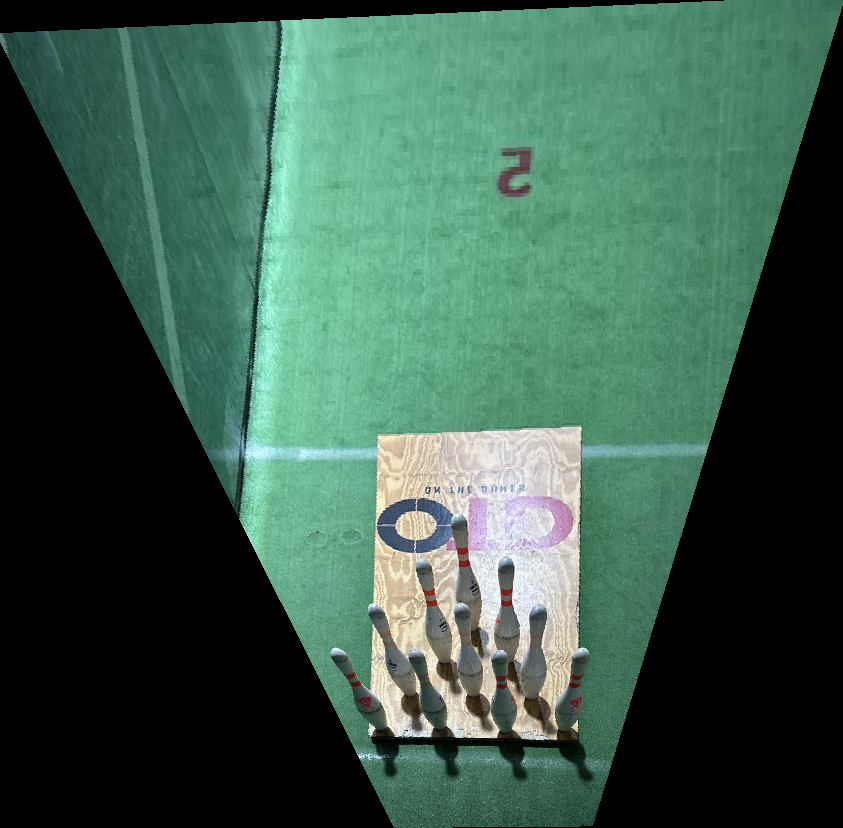

Here, I used my warp function to rectify images of bowling pins (from fowling) and a TV showing Federer into rectangles. The warp function takes an optional result-shape parameter to accomplish this. The original images and their rectifications are shown here.

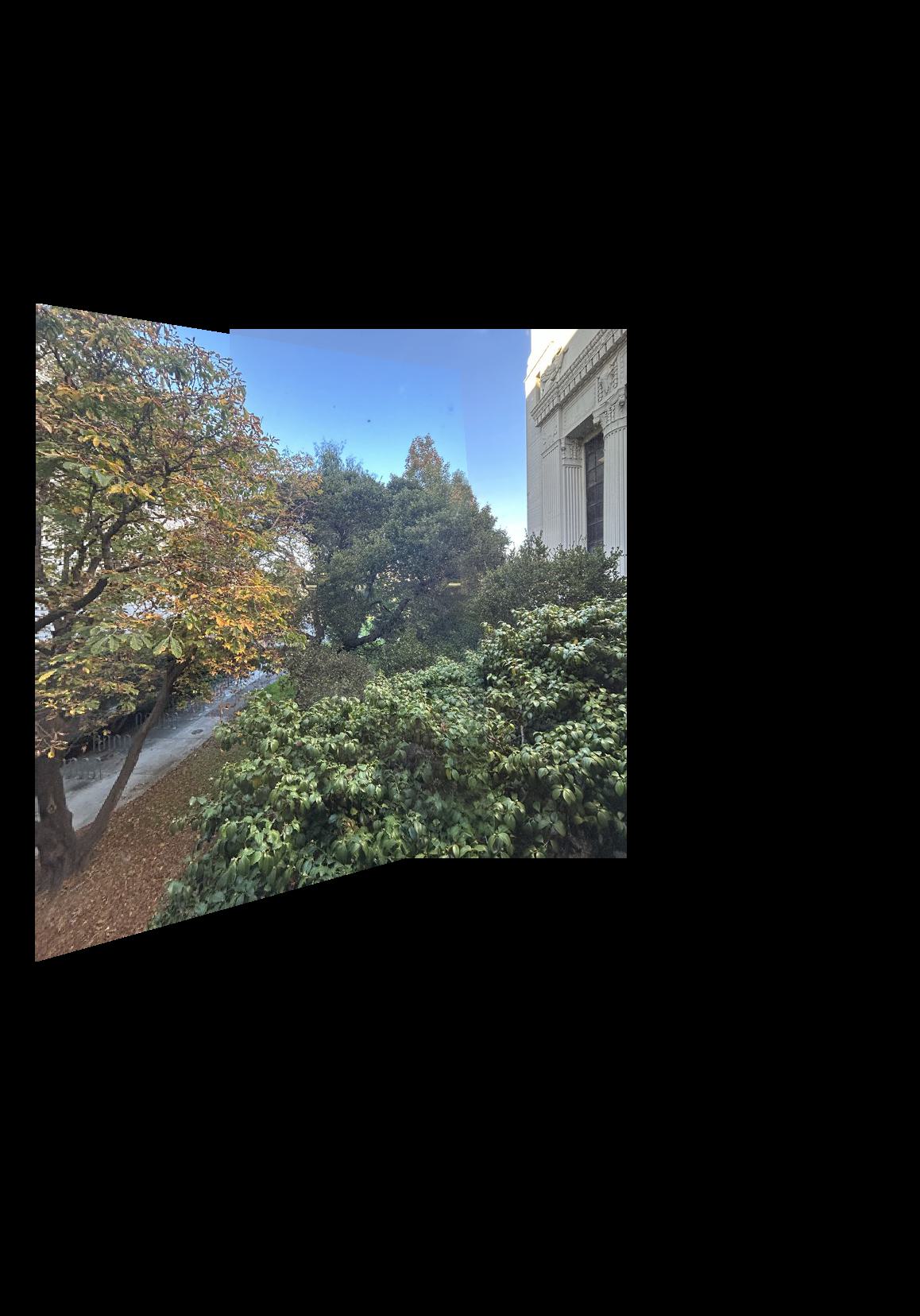

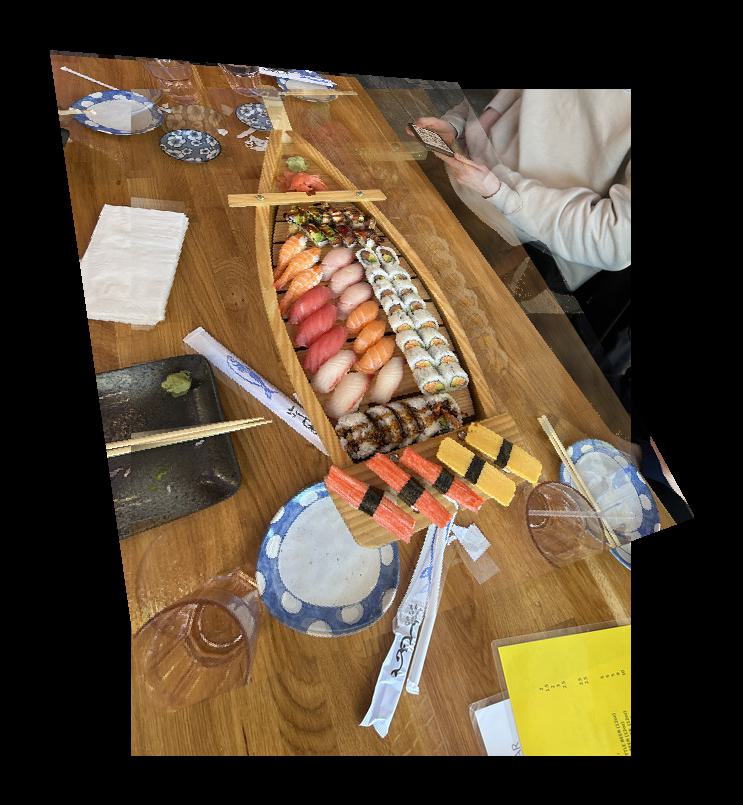

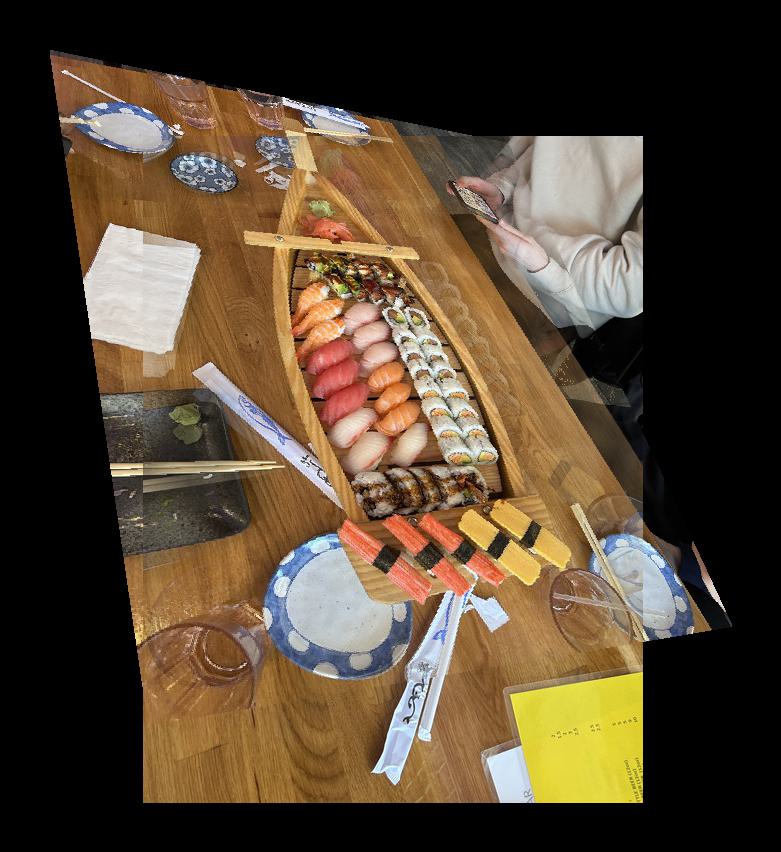

Here I wrote a function that builds a mosaic from two images and ran it on three scenes: SF Lunar New Year, a bedroom, and sushi. I first warped one image onto the other and created a blank canvas. I then found the best displacement between the two images and offset the warped image by it. Finally, I overlaid the two canvases to produce the final mosaic, averaging the pixels that were covered by both images.
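The averaging step can be sketched as follows. This is a minimal example that assumes each image has already been placed on its own full-size canvas along with a boolean coverage mask; the function name `average_blend` and the mask-based interface are assumptions made for illustration.

```python
import numpy as np

def average_blend(canvas_a, mask_a, canvas_b, mask_b):
    """Combine two H x W x C canvases, averaging where both masks are True."""
    a = canvas_a.astype(float) * mask_a[..., None]
    b = canvas_b.astype(float) * mask_b[..., None]
    # Per-pixel count of how many images cover that location (0, 1, or 2).
    weights = mask_a.astype(float) + mask_b.astype(float)
    out = np.zeros_like(a)
    covered = weights > 0
    out[covered] = (a + b)[covered] / weights[covered][:, None]
    return out
```

Pixels covered by only one image keep that image's value, and pixels covered by neither stay zero.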

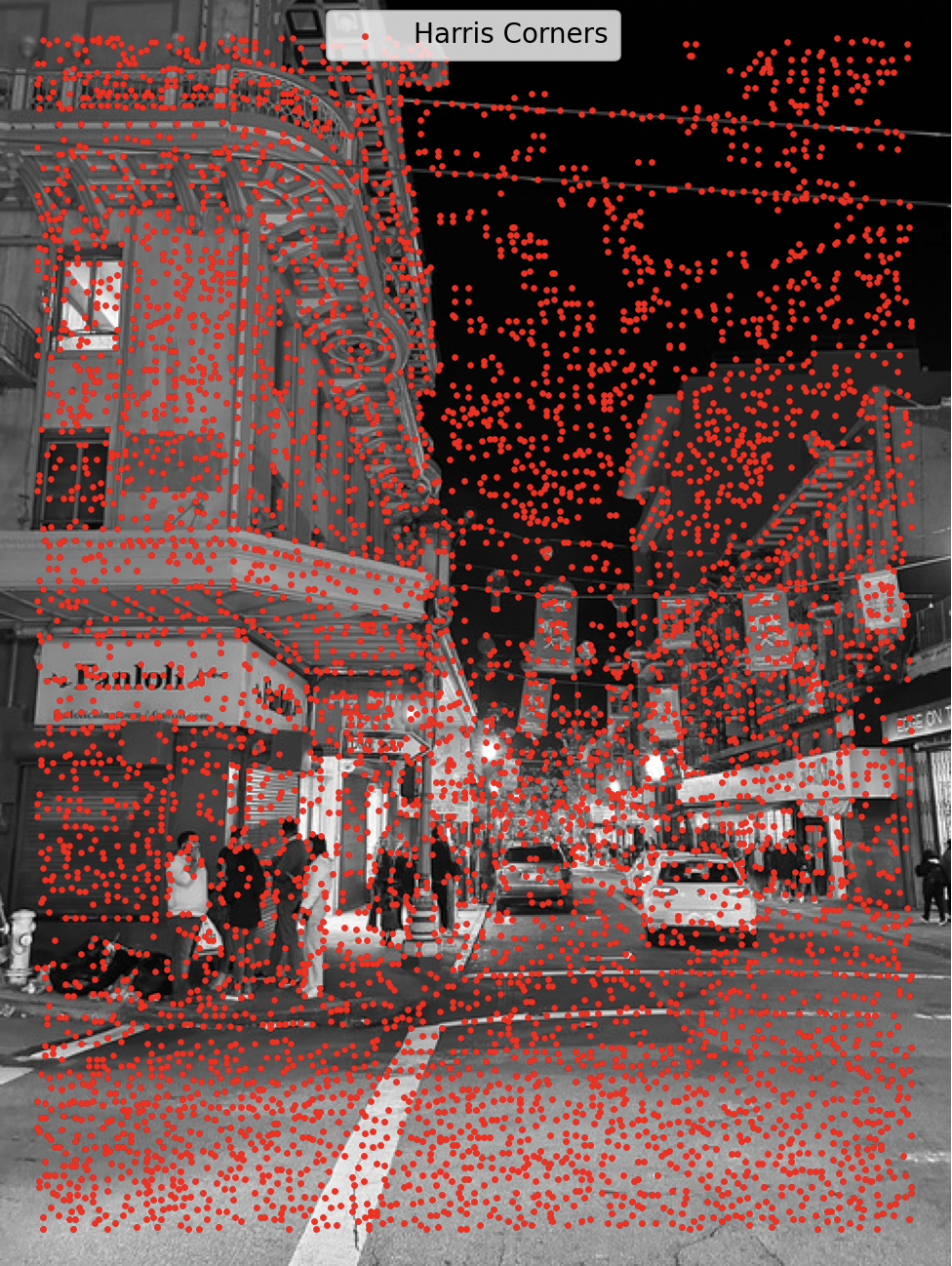

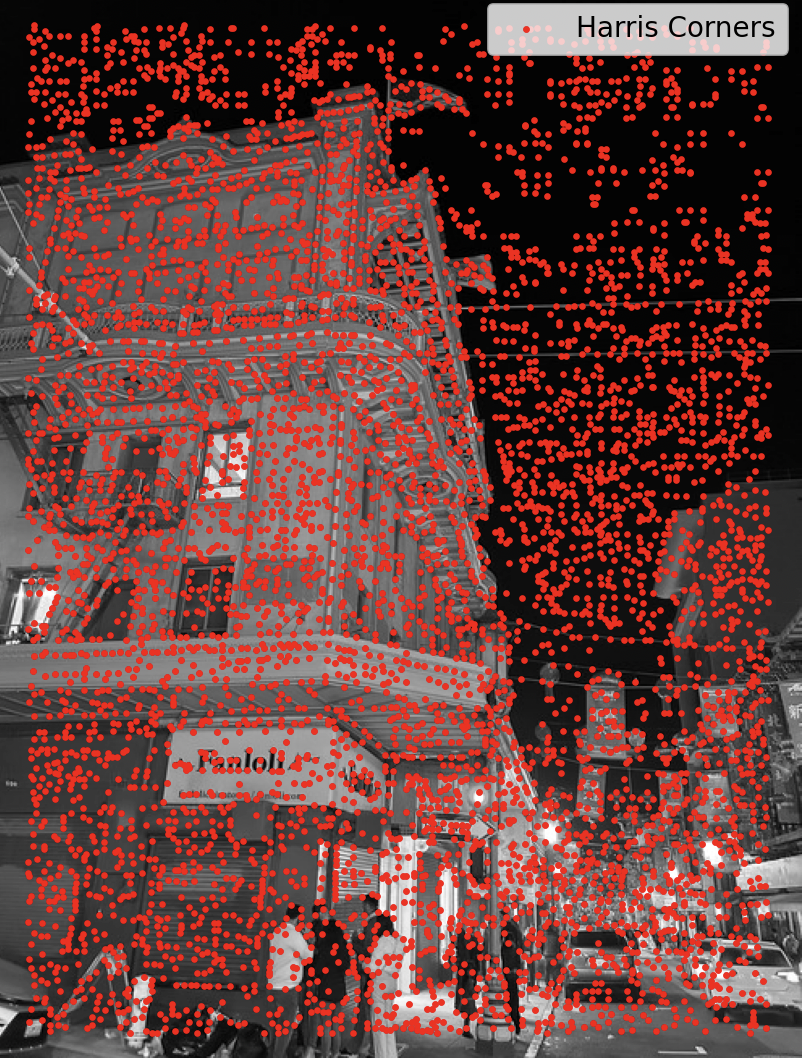

I used the Harris corner detection function provided in the project spec. Below are a couple of the images I used for automatic keypoint detection and blending, shown with all Harris corners.

For ANMS, I used the provided dist2 function to compute distances between the keypoints chosen by Harris. I used a c_robust value of 1 so that neighboring corners were not both kept as keypoints. A couple of the images I used are displayed here. For the most part, I kept the top 100 keypoints ranked by minimum suppression radius.
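A minimal sketch of the suppression-radius computation is below. It computes squared pairwise distances directly with NumPy rather than calling the provided dist2, and the function name `anms` and its parameters are hypothetical.

```python
import numpy as np

def anms(coords, strengths, num_keep=100, c_robust=1.0):
    """Return indices of the num_keep points with the largest suppression radius."""
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Squared distance between every pair of keypoints.
    diff = coords[:, None, :] - coords[None, :, :]
    d2 = (diff ** 2).sum(axis=2)
    # Point j suppresses point i when strengths[i] < c_robust * strengths[j].
    suppressed_by = strengths[:, None] < c_robust * strengths[None, :]
    # Radius of i = squared distance to the nearest point that suppresses it.
    radii = np.where(suppressed_by, d2, np.inf).min(axis=1)
    order = np.argsort(-radii)
    return order[:num_keep], radii
```

The strongest corner is never suppressed, so its radius is infinite and it is always kept first.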

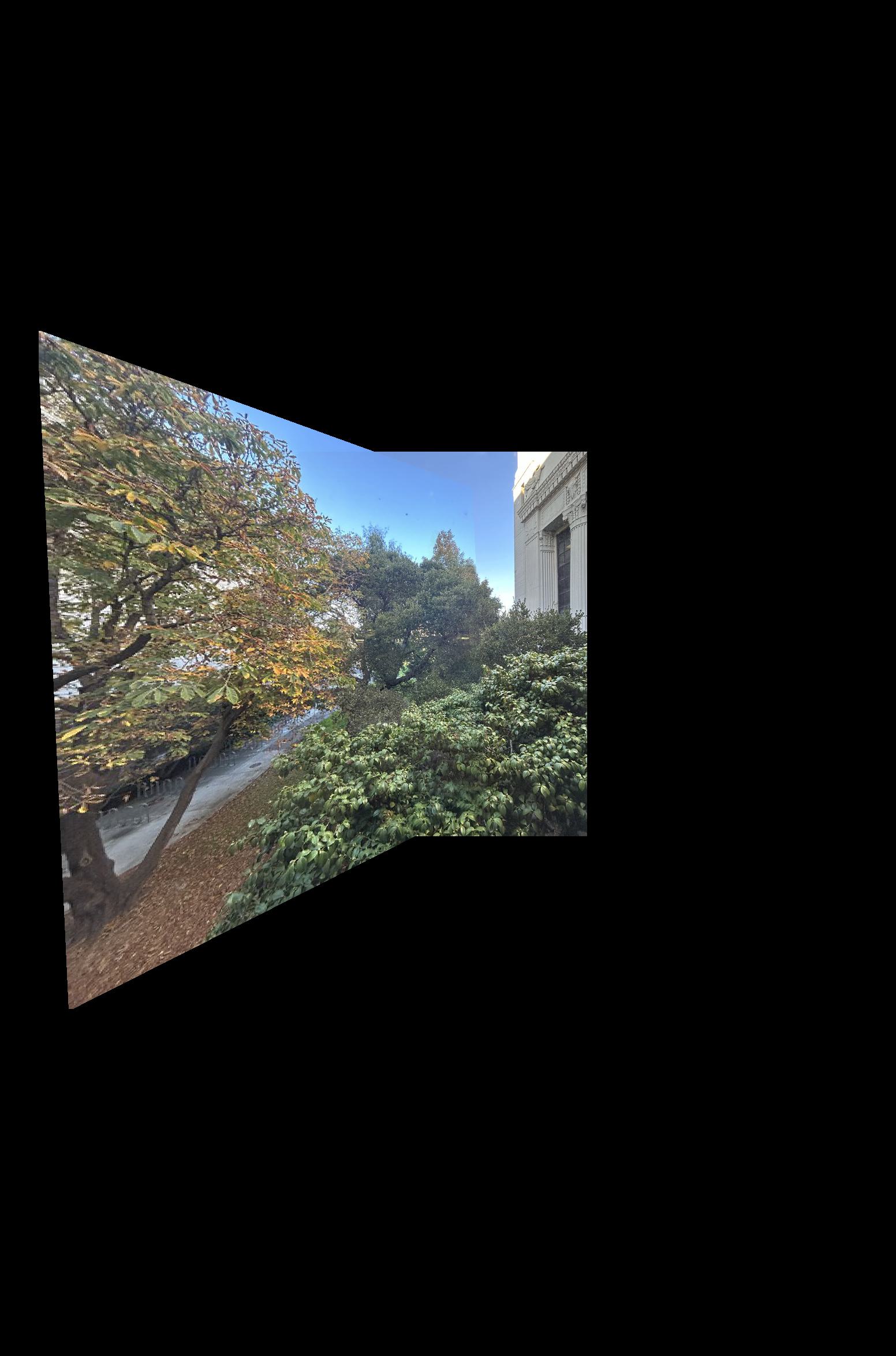

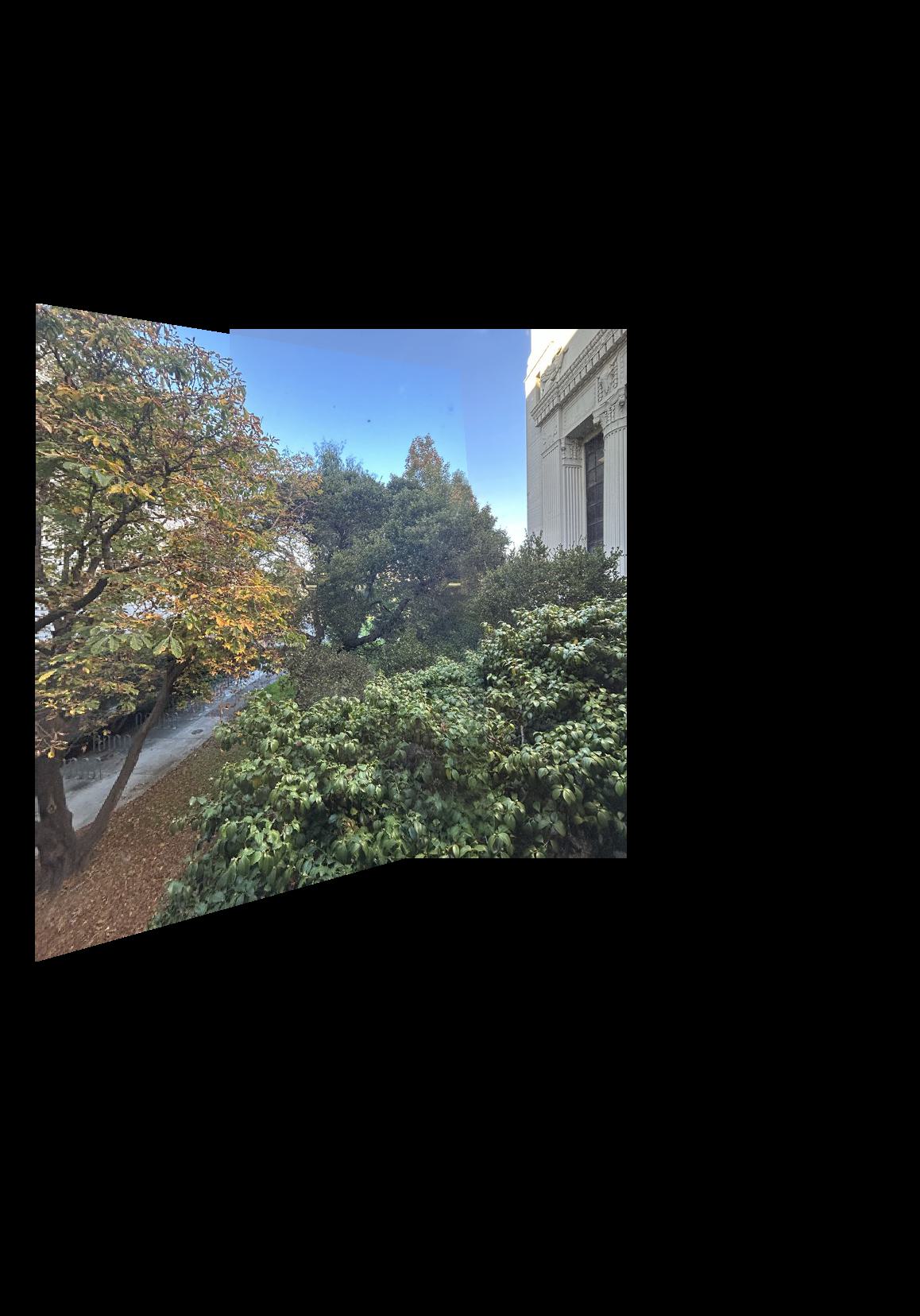

I built the feature descriptors from a 40x40 window around each keypoint, downsized by a factor of 5 (giving an 8x8 patch), then normalized and flattened. I then did automatic feature matching, using a threshold of 0.7 for nearest-neighbor matching and a threshold of 3.5 for RANSAC inliers. Finally, after computing the best homography with 4-point RANSAC, I passed it to my mosaic function from 4A to create the final images displayed below. For reference, I also display the manually computed mosaics (from manually selected keypoints) below the automatic ones.
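The matching and RANSAC steps can be sketched as below. This is an illustrative version under stated assumptions: the 0.7 threshold is treated as a ratio between the best and second-best squared distances, the 3.5 inlier threshold is treated as a reprojection distance, and the names `ratio_match`, `fit_h`, `project`, and `ransac_h` are hypothetical.

```python
import numpy as np

def ratio_match(desc_a, desc_b, ratio=0.7):
    """Match rows of desc_a to rows of desc_b, keeping unambiguous nearest neighbors."""
    d2 = ((desc_a[:, None, :] - desc_b[None, :, :]) ** 2).sum(axis=2)
    order = np.argsort(d2, axis=1)
    rows = np.arange(len(desc_a))
    best, second = d2[rows, order[:, 0]], d2[rows, order[:, 1]]
    keep = best < ratio * second  # assumption: ratio applied to squared distances
    return np.column_stack([rows[keep], order[keep, 0]])

def fit_h(src, dst):
    """Least-squares homography (up to scale) from N >= 4 correspondences."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    _, _, vt = np.linalg.svd(np.array(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def project(H, pts):
    """Apply H to N x 2 points."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_h(src, dst, n_iters=1000, thresh=3.5, seed=0):
    """4-point RANSAC: return the homography refit on the largest inlier set."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        idx = rng.choice(len(src), size=4, replace=False)
        H = fit_h(src[idx], dst[idx])
        # Degenerate samples can produce inf/nan errors; those never count as inliers.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, src) - dst, axis=1)
        inliers = err < thresh
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("RANSAC found fewer than 4 inliers")
    return fit_h(src[best], dst[best]), best
```

Refitting on all inliers at the end, rather than keeping the 4-point estimate, is a common choice that tends to give a more stable homography.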

I used SIFT here to detect keypoints and build rotation-invariant features. Below are the sushi images, along with the mosaic blended using my standard function and the mosaic blended using rotation-invariant features. The result with rotation invariance is far better.

To create a panoramic image with automatic detection of image order, I kept a current homography matrix that I updated at each step. To decide the order, I built a matching graph in which the strength of the feature matches between images determined which images to place in which order. Once the order was determined, a loop using the current homography added each image onto the canvas until the panorama was complete.
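One way such an ordering could be derived is sketched below. It assumes the matching graph is stored as a symmetric matrix of match counts between image pairs and uses a simple greedy rule; both the representation and the name `order_images` are hypothetical, so this illustrates the idea rather than reproducing my exact procedure.

```python
import numpy as np

def order_images(match_counts):
    """Greedily order images, always adding the one best linked to those placed."""
    counts = np.array(match_counts, dtype=float)
    np.fill_diagonal(counts, -np.inf)  # an image never matches itself
    n = len(counts)
    # Start from the most strongly matched pair.
    i, j = np.unravel_index(np.argmax(counts), counts.shape)
    placed = [int(i), int(j)]
    links = [(int(i), int(j))]
    while len(placed) < n:
        remaining = [k for k in range(n) if k not in placed]
        sub = counts[np.ix_(placed, remaining)]
        p, r = np.unravel_index(np.argmax(sub), sub.shape)
        # Record which already-placed image the new one attaches to.
        links.append((placed[p], remaining[r]))
        placed.append(remaining[r])
    return placed, links
```

Each entry in `links` names the pair whose homography would be composed with the current one (for example `H_current @ H_pair`) before that image is drawn on the canvas.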

I tried morphing images into a circular shape instead of a square. To do this, I generated 10 equidistant points on a circle using basic trigonometry in Python. I then had the user choose 10 equidistant points on the image using a circlfy.py file, and used these to morph the shape into a circle. Here is a flower without and with this circular morph, as well as a sushi bowl.
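The circle points can be generated with a few lines of trigonometry. This is a minimal sketch; the function name `circle_points` and its parameters are hypothetical and are not taken from circlfy.py.

```python
import math

def circle_points(center, radius, n=10):
    """Return n equally spaced (x, y) points on a circle, starting at angle 0."""
    cx, cy = center
    return [
        (cx + radius * math.cos(2 * math.pi * k / n),
         cy + radius * math.sin(2 * math.pi * k / n))
        for k in range(n)
    ]
```

These points serve as the destination correspondences, paired in order with the 10 points the user selects on the source image.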