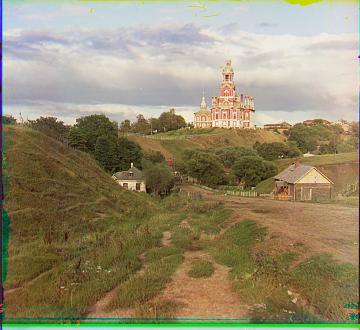

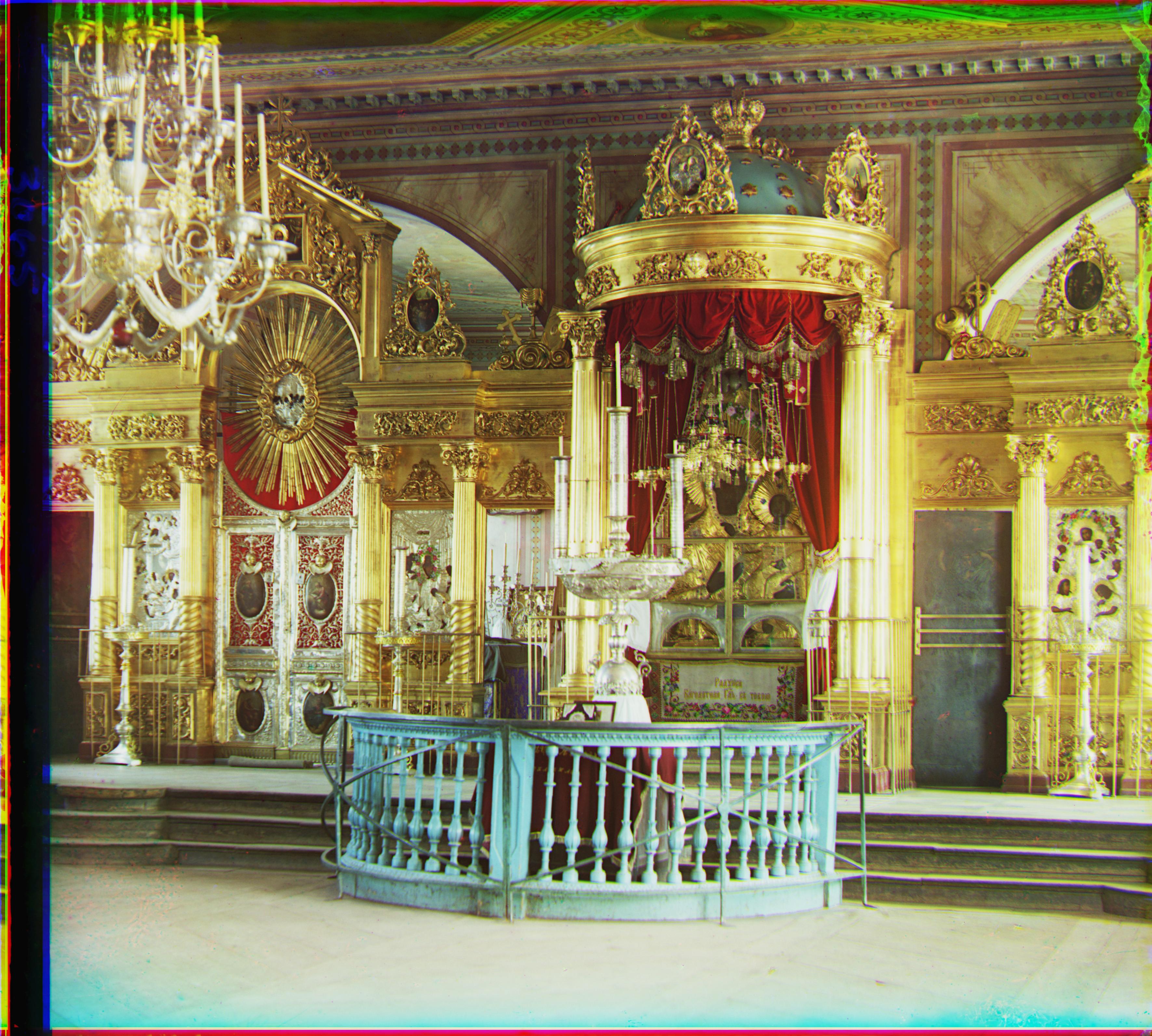

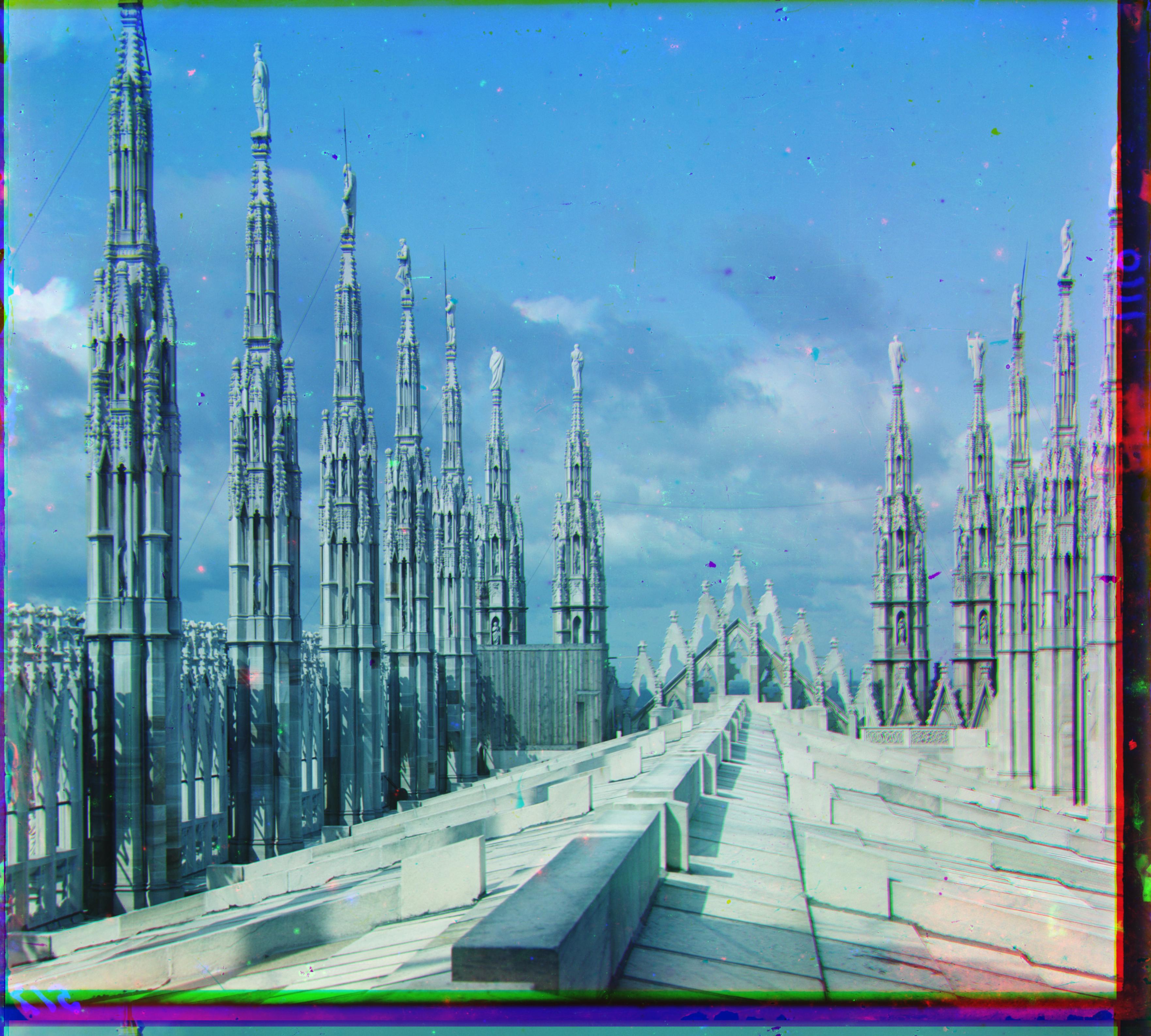

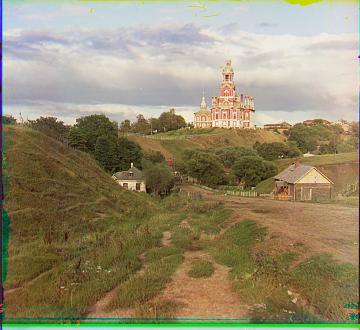

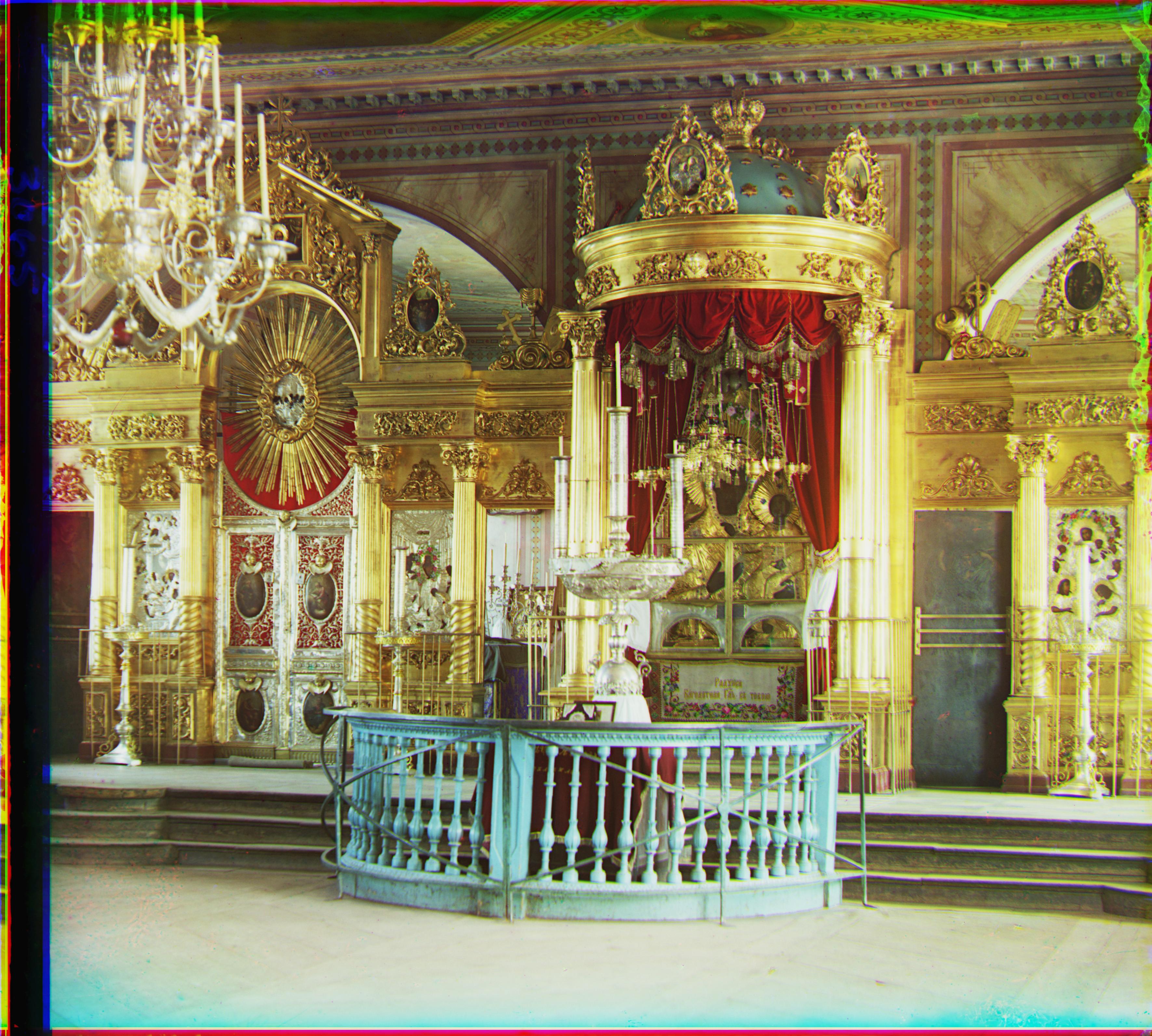

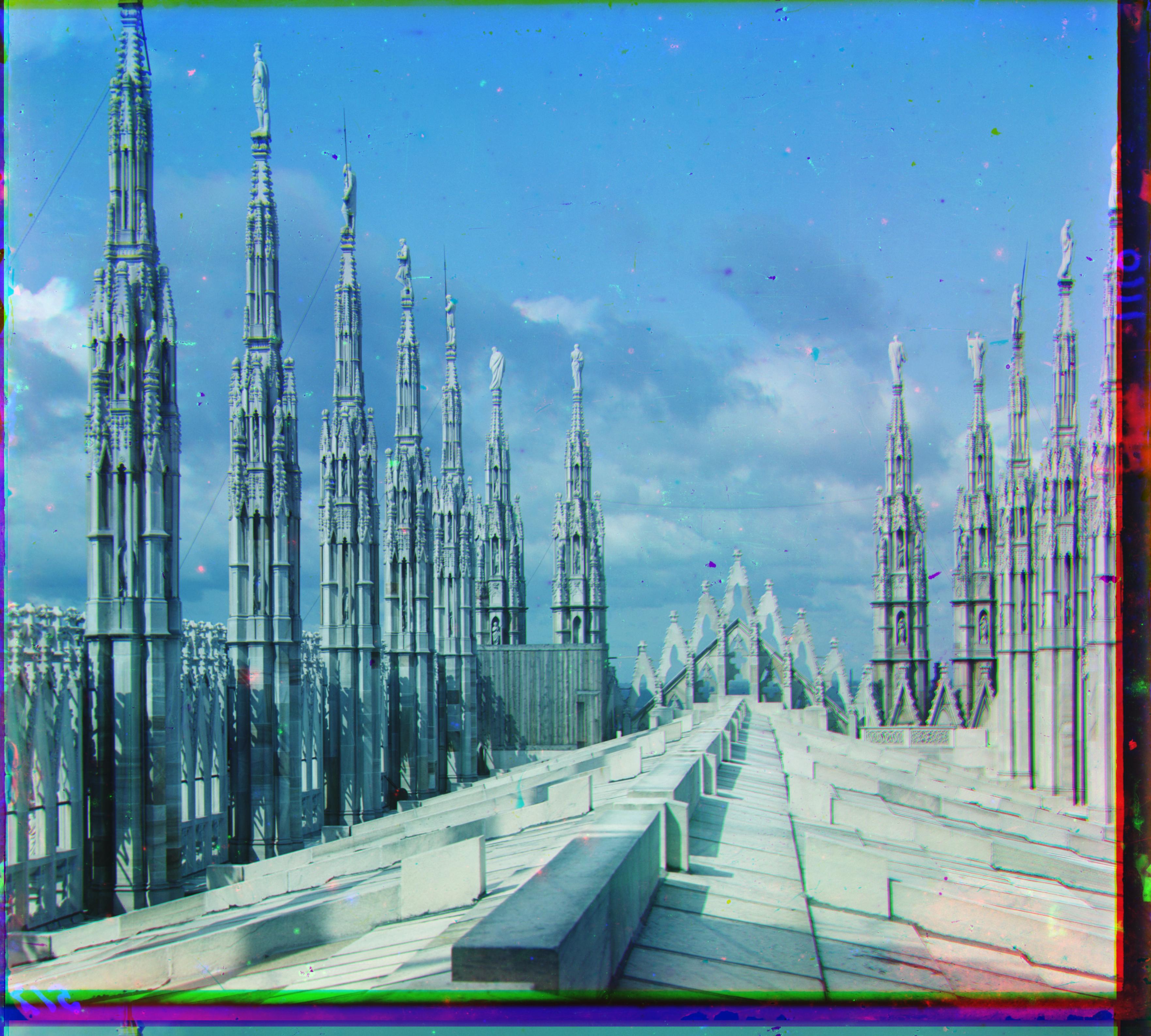

For this project, the goal was to take the digitized Prokudin-Gorskii glass plate images and use image processing techniques to automatically produce full-color images (the inputs vary in size and format: .jpg and .tif). I began with a naive solution: shifting one channel over another and trying every combination of horizontal and vertical translations of up to 30 pixels in each direction. This worked well for small images but was insufficient for larger ones, which often required larger translations, and searching a larger space of translations would have taken excessive runtime.

To mitigate this, I used an image pyramid. I first downsampled (by averaging) the larger images to roughly 200x200 pixels and found the best translation at that scale. I then moved to progressively finer levels, doubling the resolution both horizontally and vertically at each step and searching only a small window of translations around the previous estimate, since the coarser levels had already narrowed the search space significantly. With this approach I obtained very clear full-color versions of both small and large images, and the runtime was efficient: because each finer level searches only a small window, the total cost is dominated by the full-resolution level, giving O(N^2) time, where N is the number of pixels along each side.

One issue I ran into was that my original alignment strategy convolved the images, which left me with slightly cropped versions of them; this did not work well with the image pyramid strategy. Instead, I used the convolution only to find the best shift and then applied that shift by rolling the image, which preserved the image size and made the pyramid easy to implement. Another issue was the alignment metric: using the Euclidean distance between the two images to choose the best shift did not produce images as good as those obtained with structural similarity (SSIM), so I switched to SSIM.
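The coarse-to-fine search can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not a definitive implementation: it assumes each channel is a 2-D float NumPy array in [0, 1], the helper names (`global_ssim`, `interior`, `search`, `downsample`, `pyramid_align`) are hypothetical, and it swaps the locally windowed SSIM of `skimage.metrics.structural_similarity` for a single-window SSIM over the image interior so that it runs with NumPy alone. The ~200-pixel coarse size follows the text; the search radii and the 10% scoring margin are assumptions.

```python
import numpy as np


def global_ssim(a, b, data_range=1.0):
    """SSIM computed over the whole image as one window (a simplification of
    the locally windowed SSIM in skimage.metrics.structural_similarity)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (a.var() + b.var() + c2)
    return num / den


def interior(img, frac=0.1):
    """Drop a margin (assumed 10% per side) so pixels wrapped around by
    np.roll and the plate borders don't dominate the score."""
    h, w = img.shape
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def search(moving, fixed, center=(0, 0), radius=15):
    """Try every (dy, dx) within `radius` of `center`; return the shift whose
    np.roll of `moving` has the highest SSIM against `fixed`."""
    target = interior(fixed)
    best, best_score = center, -np.inf
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            # Rolling keeps the image size, so every pyramid level stays consistent.
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = global_ssim(interior(shifted), target)
            if score > best_score:
                best, best_score = (dy, dx), score
    return best


def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (an odd last row/column is dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def pyramid_align(moving, fixed, coarse_size=200, coarse_radius=15, fine_radius=2):
    """Coarse-to-fine alignment: recurse down to about `coarse_size` pixels,
    search widely there, then double the shift and refine it in a small
    window at each finer level."""
    if max(fixed.shape) <= coarse_size:
        return search(moving, fixed, radius=coarse_radius)
    dy, dx = pyramid_align(downsample(moving), downsample(fixed),
                           coarse_size, coarse_radius, fine_radius)
    return search(moving, fixed, center=(2 * dy, 2 * dx), radius=fine_radius)
```

For example, taking the blue channel as the reference (an assumption here), `pyramid_align(green, blue)` returns the `(dy, dx)` to pass to `np.roll(green, (dy, dx), axis=(0, 1))` so that green lines up with blue. Only the coarsest level pays for the wide search; every finer level evaluates a fixed 5x5 window, which is what keeps the full-resolution cost proportional to the pixel count.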

For Bells and Whistles, I attempted automated border cropping of the original images. I tried a variety of techniques, including using the mean pixel value of each row/column as the criterion and trying to remove only the white borders. The strategy that eventually removed most of both the white and the black borders was to use the percentage of pixels in a row or column that exceed some value threshold as the metric for whether that row or column belongs to the border. Above is an example demonstrating the effect of this technique on Melons.
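The percentage-of-pixels criterion can be sketched roughly as below. This is a minimal sketch, assuming a float image in [0, 1] (2-D or H x W x C); the helper names and every threshold (`low`, `high`, the 50% fraction `frac`, and the 15% cap `max_frac` on how much each side may lose) are illustrative assumptions rather than tuned values. Within each side's margin it cuts up to the innermost row or column flagged as border, so a white strip followed by a black strip is removed together even if a stray non-border line sits between them.

```python
import numpy as np


def is_border_line(line, low=0.1, high=0.9, frac=0.5):
    """Flag a row/column as border if more than `frac` of its pixels are
    near-black (< low) or near-white (> high); thresholds are assumptions."""
    extreme = (line < low) | (line > high)
    return extreme.mean() > frac


def border_width(lines, limit, **kw):
    """Among the first `limit` lines (outermost first), return how many to cut:
    everything up to and including the innermost line flagged as border."""
    flagged = [i for i, line in enumerate(lines[:limit]) if is_border_line(line, **kw)]
    return flagged[-1] + 1 if flagged else 0


def auto_crop(img, max_frac=0.15, **kw):
    """Crop white/black borders, removing at most `max_frac` of the
    height/width from each side."""
    gray = img.mean(axis=2) if img.ndim == 3 else img
    h, w = gray.shape
    top = border_width(gray, int(h * max_frac), **kw)
    bottom = border_width(gray[::-1], int(h * max_frac), **kw)
    left = border_width(gray.T, int(w * max_frac), **kw)          # columns, left to right
    right = border_width(gray.T[::-1], int(w * max_frac), **kw)   # columns, right to left
    return img[top:h - bottom, left:w - right]
```

Counting the fraction of extreme pixels, rather than averaging the whole line, is what lets a mostly white row with a few dark specks still register as border, which a mean-value test can miss.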